rêve v0.1 (devlog part 1)

rêve is an interactive piece of software in which the user explores an audio/visual landscape.

I’ve wanted to make something like this for a while: a project at the crossroads of a video game, an instrument and a piece of real-time software.

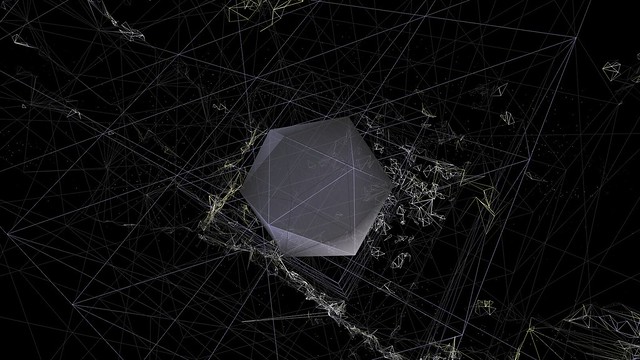

The concept is based on dreaming: just as in real life, the user cannot control the direction of their own dream. That’s why the program is built on pseudo-random rules and constantly switches between two types of environment.

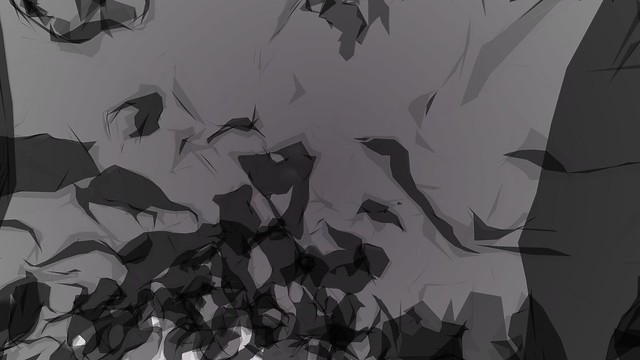

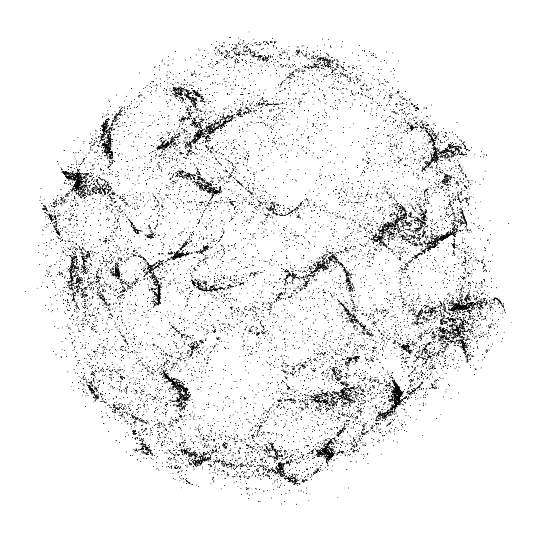

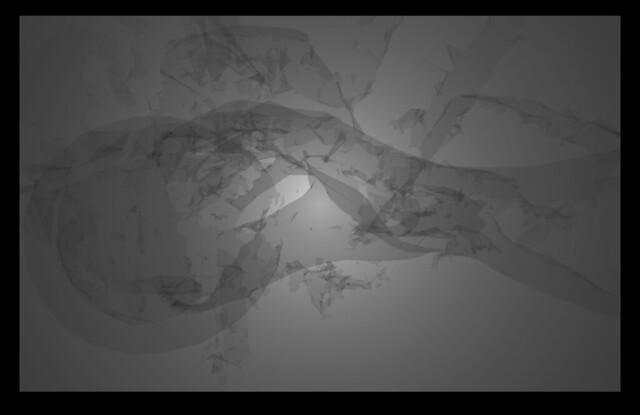

The first environment is a Kinect 3D scan of my own arm, with my hand holding a ball. Everything in it happens inside the 3D model of the arm, heading towards the ball, which moves forward on the z-axis slightly faster than the camera. This gives the impression of diving into a tunnel.
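The tunnel effect boils down to a speed difference along the z-axis. Here is a minimal per-frame sketch in plain C++ (no oF calls). The `TunnelState` struct, the starting positions and both speeds are hypothetical values chosen for illustration, and "forward" is taken as +z, which is an assumption about the scene's orientation:

```cpp
#include <cassert>

// Hypothetical state for the first environment: the ball and the camera
// both travel along the z-axis, the ball slightly faster.
struct TunnelState {
    float cameraZ = 0.0f;
    float ballZ = 100.0f;      // ball starts ahead of the camera (assumed value)
    float cameraSpeed = 1.0f;  // units per frame (assumed value)
    float ballSpeed = 1.1f;    // slightly faster than the camera (assumed value)
};

// Advance one frame. Because ballSpeed > cameraSpeed, the gap grows slowly,
// so the camera keeps diving forward without ever reaching the ball.
void updateTunnel(TunnelState& s) {
    assert(s.ballSpeed > s.cameraSpeed);
    s.cameraZ += s.cameraSpeed;
    s.ballZ += s.ballSpeed;
}

// Distance between the ball and the camera along z.
float gap(const TunnelState& s) {
    return s.ballZ - s.cameraZ;
}
```

In an oF app, `updateTunnel()` would run in `ofApp::update()`, with the camera and the ball positioned from `cameraZ` and `ballZ` before drawing.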

To let the program make the switch by itself, a random number between 0 and 60 is chosen at the end of each elapsed minute. This number is the second within the next minute at which the change of environment happens.
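The minute-by-minute switch can be sketched as a small scheduler. This is an assumption-laden sketch rather than the project's code: the `EnvironmentScheduler` class is hypothetical, time is fed in as whole elapsed seconds (in oF this could be derived from `ofGetElapsedTimef()`), and "between 0 and 60" is read as a second in the range 0 to 59 so that a switch lands inside every minute:

```cpp
#include <cassert>
#include <random>

// Hypothetical scheduler: when a new minute starts (i.e. at the end of the
// previous one) a new switch second is drawn; once the clock reaches that
// second within the current minute, the environment flips.
class EnvironmentScheduler {
public:
    explicit EnvironmentScheduler(unsigned seed) : rng_(seed), dist_(0, 59) {
        switchSecond_ = dist_(rng_);  // switch moment for the first minute
    }

    // Call once per frame with the elapsed time in whole seconds.
    void update(int elapsedSeconds) {
        assert(elapsedSeconds >= 0);
        int minute = elapsedSeconds / 60;
        int second = elapsedSeconds % 60;
        if (minute != currentMinute_) {
            // A minute has elapsed: draw the switch moment for the new one.
            currentMinute_ = minute;
            switchSecond_ = dist_(rng_);
            switched_ = false;
        }
        if (!switched_ && second >= switchSecond_) {
            environment_ = 1 - environment_;  // toggle between the two environments
            switched_ = true;
        }
    }

    int environment() const { return environment_; }
    int switchSecond() const { return switchSecond_; }

private:
    std::mt19937 rng_;
    std::uniform_int_distribution<int> dist_;  // inclusive range 0..59 (assumption)
    int currentMinute_ = 0;
    int switchSecond_ = 0;
    bool switched_ = false;
    int environment_ = 0;  // 0 = arm/ball tunnel, 1 = second environment
};
```

Because the draw happens once per minute and the flip is latched with `switched_`, each minute produces exactly one change of environment, at a moment the user cannot predict.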

This random number also shapes each environment’s alternative realities: depending on its value, some sounds, models and textures may or may not be loaded. The core of the program is a set of conditional operators that drive all of the underlying events.
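The original conditional code isn't reproduced here, so the following is only a hypothetical sketch of how a single random value could branch into alternative realities. The thresholds, the `Reality` struct and every asset name are invented for illustration:

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical bundle of assets for one "alternative reality".
struct Reality {
    std::string model;
    std::string texture;
    std::vector<std::string> sounds;
};

// environment: 0 = arm/ball tunnel, 1 = second environment.
// randomValue: the second drawn each minute (0-59 here, an assumption).
Reality chooseReality(int environment, int randomValue) {
    assert(environment == 0 || environment == 1);
    assert(randomValue >= 0 && randomValue < 60);
    Reality r;
    if (environment == 0) {
        r.model = "arm_scan";  // the Kinect scan of the arm
        if (randomValue < 20) {
            r.texture = "texture_a";
            r.sounds = {"sample_01"};
        } else if (randomValue < 40) {
            r.texture = "texture_b";
            r.sounds = {"sample_02", "sample_03"};
        } else {
            r.texture = "texture_c";
            r.sounds = {"sample_04"};
        }
    } else {
        r.model = "environment_2";
        // Even values load an extra sound layer; odd values keep it sparse.
        r.texture = (randomValue % 2 == 0) ? "texture_d" : "texture_e";
        r.sounds = {"sample_05"};
        if (randomValue % 2 == 0) {
            r.sounds.push_back("sample_06");
        }
    }
    return r;
}
```

Keeping the branching in one pure function like this makes it easy to check which combination of assets a given value produces before wiring the result into the loaders.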


For the sound, a total of 10 samples are loaded directly in oF (openFrameworks) and triggered according to the random number chosen every minute. The output of each sample is mapped to model parameters such as color, radius, vertices, scale, lights and even the camera’s depth position.
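Mapping a sample's output to a visual parameter is essentially a linear remap. Below is a sketch in plain C++: `mapRange()` behaves like oF's `ofMap(value, inputMin, inputMax, outputMin, outputMax, clamp)`, while the `VisualParams` struct, the output ranges and the assumption that the level is normalized to 0 to 1 are all hypothetical:

```cpp
#include <algorithm>
#include <cassert>

// Linear remap with optional clamping, similar to oF's ofMap().
float mapRange(float value, float inMin, float inMax,
               float outMin, float outMax, bool clamp = false) {
    assert(inMax != inMin);
    float t = (value - inMin) / (inMax - inMin);
    if (clamp) t = std::max(0.0f, std::min(1.0f, t));
    return outMin + t * (outMax - outMin);
}

// Hypothetical visual parameters driven by one sample's output level.
struct VisualParams {
    float radius;
    float scale;
    float cameraZ;
};

// level: the sample's current output level, assumed normalized to 0..1
// (for example an average of a few bands from ofSoundGetSpectrum()).
VisualParams paramsFromLevel(float level) {
    VisualParams p;
    p.radius  = mapRange(level, 0.0f, 1.0f, 10.0f, 80.0f, true);
    p.scale   = mapRange(level, 0.0f, 1.0f, 1.0f, 1.5f, true);
    // Louder sound pulls the camera closer (assumed direction and range).
    p.cameraZ = mapRange(level, 0.0f, 1.0f, 500.0f, 300.0f, true);
    return p;
}
```

Clamping matters here: a level spike above the expected range would otherwise push the radius or camera position far outside the scene.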


The sound amplitude is mapped to the mouse’s horizontal position across the screen and the frequency to its vertical position. Since the sound changes with mouse interaction, no two explorations ever sound the same.
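The mouse mapping can be sketched the same way. In this hypothetical `controlFromMouse()` helper, x drives amplitude in the 0 to 1 range (the range `ofSoundPlayer::setVolume()` expects) and y drives a frequency between 110 Hz and 880 Hz; the frequency range and the choice to put high pitches at the top of the screen are assumptions:

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical sound controls derived from the mouse position.
struct SoundControl {
    float amplitude;  // 0.0 (silent) to 1.0 (full volume)
    float frequency;  // Hz
};

SoundControl controlFromMouse(int mouseX, int mouseY, int screenW, int screenH) {
    assert(screenW > 1 && screenH > 1);
    // Normalize each coordinate to 0..1, clamping in case the mouse
    // is reported outside the window.
    float nx = std::max(0.0f, std::min(1.0f, static_cast<float>(mouseX) / (screenW - 1)));
    float ny = std::max(0.0f, std::min(1.0f, static_cast<float>(mouseY) / (screenH - 1)));

    const float minFreq = 110.0f;  // assumed range
    const float maxFreq = 880.0f;

    SoundControl c;
    c.amplitude = nx;  // left edge silent, right edge loudest
    // Screen y grows downward, so invert it: top of the screen = highest pitch.
    c.frequency = maxFreq - ny * (maxFreq - minFreq);
    return c;
}
```

In oF the inputs would come from `mouseX`/`mouseY` and `ofGetWidth()`/`ofGetHeight()`, for example inside `ofApp::mouseMoved()`.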